This article is part of our collection at Banana Prompts, where you can explore high-quality Nano Banana prompts.

The "Luna Books" Moment: When AI Finally Learned to Read

For the last three years, the generative AI community has been locked in a fever dream of six-fingered hands, gibberish neon signs, and "photorealistic" portraits that looked like they were glazed in Krispy Kreme sugar syrup. We accepted the surrealism because the magic was intoxicating. We forgave MidJourney for spelling "Coffee" as "Covfeefe" because the lighting was cinematic. We forgave Stable Diffusion for turning a crowd of people into a Cronenbergian nightmare because it was open-source and ran on our gaming PCs.

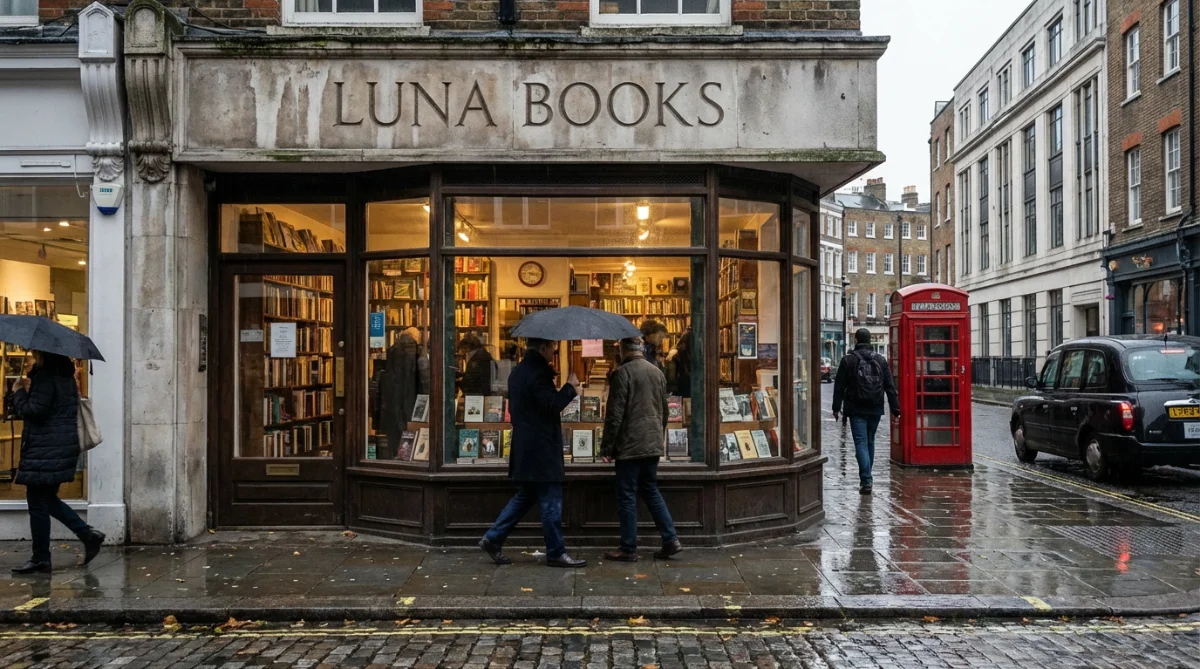

But then, the "Luna Books" image dropped.

It wasn't the most artistic image ever generated. It wasn't a sprawling cyberpunk cityscape or a baroque oil painting of a frog king. It was a simple, boring photograph of a bookstore storefront. But it was terrifyingly competent. The sign above the door read "Luna Books"—not "Lura Boks" or "Luuna Boooks." The font was etched into the stone facade with correct physical depth. The lighting wasn't the dramatic "God rays" of a fantasy render; it was the flat, believable, slightly overcast lighting of a Tuesday afternoon in London. The people in the reflection of the window looked like actual pedestrians, not melted wax figures.

This was the quiet arrival of Nano Banana Pro.

While the internet was busy arguing about video generators and 3D splats, Google DeepMind released a model that didn't just "generate" pixels—it seemingly understood them. Built on the back of the Gemini 3 Pro system, Nano Banana Pro (often codenamed "NB2" or "Gemini Image 3" in the technical backwaters) represents a fundamental shift in how we talk to machines. It is no longer about rolling the dice and hoping for a happy accident. It is about "Thinking" models—systems that reason about layout, spelling, and physics before they ever lay down a single pixel.

In this deep dive for BananaPrompts.fun, we are going to strip away the corporate press releases and get into the messy, glorious reality of this model. We’ll look at why it’s currently the king of "logic" art, why it sometimes feels like a strict librarian compared to MidJourney’s wild artist, and how you—yes, you—can drive this thing to create images that are shockingly, disturbingly real.

1. What Is Nano Banana Pro? (And Why the Weird Name?)

1.1 The Architecture of "Thinking"

To understand why Nano Banana Pro feels different, you have to understand the engine block. Most AI image generators we’ve grown to love—Stable Diffusion XL, MidJourney v6—are primarily diffusion models driven by direct text encoders. You type "cat on a bike," the model turns those words into vectors, and then it tries to denoise a static field of fuzz until it sees a cat on a bike. It’s a stochastic process. It relies on probability. It doesn't "know" what a bike is; it just knows what pixels usually go near the word "bike."

Nano Banana Pro is different. It sits on top of Gemini 3 Pro, a massive multimodal Large Language Model (LLM). When you give NB Pro a prompt, it doesn't just start painting. It enters a "Thinking" phase.

During this split-second (or sometimes 15-second) pause, the model effectively has a conversation with itself:

- Decomposition: It breaks your prompt down. "Okay, they want a cat. They want a sunbeam. The sunbeam implies a light source. Where is the light coming from? The window. Therefore, the shadow must fall here."

- Fact-Checking: If you ask for a "1990s Game Boy," it can ping Google Search (in the enterprise versions) or access its vast internal knowledge to verify the button layout. It knows the A and B buttons share one color (red or purple, depending on the model context) before it generates.

- Layout Planning: It spatially maps the image. "The text 'Luna Books' needs to go on the sign. The sign is stone. Therefore, the text must follow the perspective of the stone."

This is why Google claims it offers "studio-grade control." It’s not just generating; it’s constructing.

1.2 The Branding: From Meme to Machine

The name "Nano Banana" is a classic piece of tech culture whiplash. The original Nano Banana 1 was Google’s attempt to be "fun." It was fast, it made figurines, it did "mall pics," and it was designed to be meme-worthy. It was the AI equivalent of a Snapchat filter—delightful, ephemeral, and a little bit silly.

Nano Banana Pro (NB2) is the grown-up sibling who went to engineering school. The "Pro" tag isn't just marketing fluff; it signals a move to:

- Native 4K Resolution: No more blurry 1024x1024 squares. We’re talking 4096x4096 production-ready assets.

- Reference Consistency: The ability to look at 14 different photos and say, "Okay, draw this guy in that style," without morphing him into a different person.

- Safety & Watermarking: The inclusion of SynthID, an invisible pixel-level watermark, because Google knows this thing is good enough to cause geopolitical incidents if left unchecked.

This duality creates a funny user experience. You’re using a tool with a fruit emoji in its name 🍌 to generate enterprise-grade infographics and photorealistic legal evidence.

2. The "Shockingly Real" Aesthetic: A New Kind of Uncanny

If MidJourney is a cinematographer like Roger Deakins—obsessed with atmosphere, rich color grading, and dramatic shadows—then Nano Banana Pro is a documentary photographer working for National Geographic or a forensic investigator.

2.1 The Death of "Vibes," The Birth of Physics

The most jarring thing about using Nano Banana Pro for the first time is how boring the lighting can be—in a good way. Ask MidJourney for a "kitchen," and you get a magical, dust-mote-filled fantasy kitchen with lights glowing from nowhere. Ask Nano Banana Pro for a "kitchen," and you get a kitchen. The fluorescent lights hum with a greenish tint. The shadows under the cabinets are pitch black because the light source is directional. The stainless steel fridge reflects the messy counter opposite it.

This is "Context-Aware Reconstruction". The model understands the physics of light transport.

The "Golden Hour" Test: In a user test modifying a landscape photo, NB Pro didn't just tint the image orange. It moved the sun. It elongated the shadows across the gravel. It changed the reflectivity of the water to match a low-angle light source. It "rebuilt the scene's illumination" rather than applying a filter.

2.2 Texture and the "Plastic" Problem

One of the biggest complaints about AI art (especially from Stable Diffusion XL and DALL-E 3) is the "plastic skin" effect. Everyone looks like they’ve had a chemically aggressive facial peel.

Nano Banana Pro makes a massive leap here, but it’s not perfect. It excels at inorganic textures: stone, wood, metal, glass.

User Report: A designer generated a "luxury bracelet on a marble surface." The model rendered the marble with subsurface scattering and correctly etched a logo into the stone, respecting the grain.

However, when it comes to humans, it can still fall into the "Gloss Trap."

The Critique: In the "One Prompt Face-Off," while NB Pro won on logic, critics noted that the people looked "a bit too smooth and perfect, more like a polished stock photo than a gritty travel snap". It lacks the pores, the peach fuzz, and the chaotic imperfections of a RAW camera file that MidJourney v6/v7 has mastered. It’s "Commercial Real," not "Human Real."

2.3 The "Homunculus" Fix

We have to talk about anatomy. For years, we’ve been playing "count the fingers." Stable Diffusion is notorious for "body horrors"—twisted spines, extra legs, and faces that melt into the background.

Because of its "Thinking" phase, NB Pro seems to have a skeletal understanding of subjects.

The Crowd Scene: In the "Luna Books" test, it generated a crowd of travelers. They all had heads. They all had limbs attached to the correct sockets. They were interacting with the environment, not clipping through it.

Consistency: It maintains identity across poses. If you generate a character and then ask for a side profile, it rotates the 3D mental model of that character rather than generating a new person who vaguely looks similar.

3. The Text Revolution: Why "Luna Books" Matters

This is the killer feature. This is why designers are cancelling their stock photo subscriptions.

3.1 The Technical Hurdle

Why was text so hard for AI? Because to a diffusion model, the letter "A" isn't a semantic symbol; it's just a collection of lines, like a twig or a crack in the pavement. The model didn't know how to write; it just knew what writing looked like.

Nano Banana Pro decouples the "concept" of text from the "image" of text. It uses a separate weight set for language representation. When you ask for the word "Coffee," the LLM component says, "Okay, construct the glyphs C-O-F-F-E-E," and the image component says, "Got it, I’ll render them in neon on a brick wall."

3.2 Typography in the Wild

The results are startling.

- Logos and Branding: You can generate a "minimalist coffee shop logo on a kraft paper cup," and the text will be legible, centered, and follow the curve of the cup.

- Multilingual Mastery: It’s not just English. The model supports Arabic, Chinese, and Japanese. A user successfully generated a menu with Japanese pricing and distinct kanji characters that were visually coherent.

- Infographics: This is the most "Google" feature imaginable. You can generate a chart. A real chart. With axes that are labeled. With bars that correspond to the numbers you provided (or that it searched for). No more hallucinatory data viz that looks pretty but means nothing.
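If you want to check results like these yourself, a rough scoring habit helps. The sketch below compares the text you asked for against the text you actually read off the image. The function names are made up for illustration, the "rendered" strings are typed in by hand (no OCR here), and this is not how any published accuracy figure was measured.

```python
from difflib import SequenceMatcher


def text_match(expected: str, rendered: str) -> float:
    """Return a 0.0-1.0 similarity score between two strings, ignoring case."""
    return SequenceMatcher(None, expected.lower(), rendered.lower()).ratio()


def is_exact(expected: str, rendered: str) -> bool:
    """True only when the rendered text equals the request (case and outer spaces ignored)."""
    return expected.strip().lower() == rendered.strip().lower()


# `rendered` is whatever you read off the generated sign yourself.
for rendered in ["Luna Books", "Lura Boks", "Luuna Boooks"]:
    score = round(text_match("Luna Books", rendered), 2)
    print(f"{rendered!r}: score={score}, exact={is_exact('Luna Books', rendered)}")
```

For signage, `is_exact` is the one that matters: a near miss is still a typo on your storefront.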

3.3 The "Secret" to Perfect Text

The secret, according to power users, is specificity. You can't just say "some text." You have to treat the prompt like a design brief.

Prompt Tip: "Signage reading 'BANANA' in bold, sans-serif, white Helvetica font, kerning 10px, centered on the glass door." The more specific you are about the typography, the better NB Pro performs.
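If you write a lot of signage prompts, you can template the design brief so nothing gets left vague. This is a minimal sketch: `signage_prompt` and its parameter names are hypothetical, and it only builds a string, it does not call any model.

```python
def signage_prompt(text: str, font: str, weight: str, color: str, placement: str) -> str:
    """Build a typography clause for an image prompt (illustrative helper)."""
    if not text.strip():
        raise ValueError("text must not be empty")
    # Quote the literal text so it is unambiguous which words should appear.
    return (
        f"Signage reading '{text}' in {weight}, {color} {font} font, "
        f"{placement}."
    )


print(signage_prompt("BANANA", "Helvetica", "bold", "white", "centered on the glass door"))
```

Every argument is required on purpose: if you can't fill in a slot, you haven't finished the brief.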

4. The Battle Royale: Nano Banana Pro vs. The World

How does it stack up against the heavyweights? Let's break it down in a way that helps you decide which subscription to keep.

4.1 Comparison Table: The Specs

| Feature | Nano Banana Pro | MidJourney v7 | Stable Diffusion (SDXL/Flux) | DALL-E 3 (ChatGPT) |
|---|---|---|---|---|
| Vibe | The Smart Designer | The Art House Director | The DIY Engineer | The Helpful Intern |
| Text Rendering | SOTA (94% Accuracy) | Improved, still glitchy | Getting better (Flux), but hard | Good, but hallucinates |
| Realism | Documentary / Stock Photo | Cinematic / Painterly | Highly Variable (Model dependent) | Plastic / Digital Art |
| Logic/Reasoning | High ("Thinking" Mode) | Medium (Vary Region) | Low (Needs ControlNet) | Medium (GPT-4 refined) |
| Reference Images | Up to 14 (Identity Keep) | Style References | Unlimited (LoRA/ControlNet) | Limited |
| Resolution | Native 4K | Upscale required | Variable | 1024x1024 |
| Cost | Freemium (Google One) | $10-$120/mo | Free (Requires GPU) | $20/mo (Plus) |
| Censorship | High (Safety Rails) | Moderate | Low (Uncensored) | High |

4.2 Vs. MidJourney: The Soul vs. The Brain

MidJourney is still the king of beauty. If you want an image that makes you feel something, use MidJourney. It has a "secret sauce" of aesthetics—a tendency to add dramatic fog, emotional lighting, and complex textures that simply look gorgeous.

Nano Banana Pro is the king of utility. If you need a picture of a "blue 2024 Toyota Camry parked in front of a specific bakery with a sign that says 'Open' and a cat in the window," MidJourney will give you a beautiful painting of a car that looks kind of like a Camry in a fantasy village. Nano Banana Pro will give you the exact car, the exact sign, and the exact cat.

Takeaway: Use MJ for your album cover. Use NB Pro for your slide deck or ad campaign.

4.3 Vs. Stable Diffusion: The Walled Garden vs. The Wild West

Stable Diffusion (and the newer open-weight models that share its ecosystem, such as Flux or Z-Image-Turbo) is for the power user who wants to own the pipeline. You can run it locally. You can train a LoRA on your own face. You can use ControlNet to pose a skeleton exactly how you want. But it is hard. It requires Python scripts, GPU VRAM, and patience.

Nano Banana Pro is "Stable Diffusion for people who have jobs." It abstracts all that complexity into a conversation. You don't need ControlNet; you just ask, "Move the cup to the left."

The Speed Factor: A Reddit user noted that "Z-Image-Turbo" is insanely fast and open-source, but Nano Banana Pro offers that "polished artistic style" and logic without the setup time. However, privacy advocates will always prefer SD because Google is watching your prompts.

4.4 Vs. DALL-E 3: The Smart vs. The Smarter

DALL-E 3 was the first to really integrate LLMs (via ChatGPT) to rewrite prompts. But DALL-E 3 has a very specific "look"—it tends to be cartoony, smooth, and vibrant. Nano Banana Pro feels like a generational leap over DALL-E 3 in terms of photorealism and text accuracy. In the "One Prompt Face-Off," DALL-E 3 nailed the text but failed the vibe, looking too glossy. NB Pro nailed both.

5. The User Guide: How to Drive the Banana

You’ve got access (likely via Gemini Advanced or a free trial). How do you get the good stuff?

5.1 The "Thinking" Mode Prompt Structure

Forget the "4k, 8k, trending on artstation, masterpiece" word salad. That was for the old dumb models. Nano Banana Pro wants you to talk like a director.

Structure: [Subject] + [Action] + [Details] + [Lighting/Camera] + [Style]

Example Prompt (The Impossible Shot): "A macro shot of a vintage mechanical watch mechanism. The gears are brass and steel. Engraved on the main gear is the text 'TEMPUS FUGIT' in tiny, precise serif letters. Lighting is dramatic, coming from the side to highlight the scratches on the metal. High contrast, shallow depth of field, focus on the engraving."

Why this works: You gave it material physics (brass/steel), specific text ('TEMPUS FUGIT'), and camera instructions (macro, shallow depth of field). The "Thinking" model will lock focus on the engraving because you told it to.
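If you like keeping your prompts tidy, the five-slot structure above maps neatly onto a small data class. Treat this as a sketch: `DirectorPrompt` and its field names are invented for illustration, and the output is just a string you would paste into Gemini yourself.

```python
from dataclasses import dataclass


@dataclass
class DirectorPrompt:
    """Hypothetical container for the five slots in the structure above."""
    subject: str
    action: str = ""
    details: str = ""
    lighting_camera: str = ""
    style: str = ""

    def render(self) -> str:
        # Keep the slot order fixed and skip any slot left empty.
        parts = [self.subject, self.action, self.details,
                 self.lighting_camera, self.style]
        cleaned = [p.strip().rstrip(".") for p in parts if p.strip()]
        return ". ".join(cleaned) + "."


watch = DirectorPrompt(
    subject="A macro shot of a vintage mechanical watch mechanism",
    details=("The gears are brass and steel. Engraved on the main gear is "
             "the text 'TEMPUS FUGIT' in tiny, precise serif letters"),
    lighting_camera=("Lighting is dramatic, coming from the side. "
                     "Shallow depth of field, focus on the engraving"),
    style="High contrast",
)
print(watch.render())
```

The fixed slot order is the point: you can swap the lighting or the style without touching the subject, which makes it easy to compare variations.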

5.2 The "Conversation" Edit

This is where NB Pro flexes. You don't have to get it right on the first try.

- Generate: "Create a modern living room with a beige sofa."

- Refine: "Make it night time. Turn on the floor lamp. Change the sofa to navy blue velvet."

- Iterate: "Add a dog sleeping on the rug. Make it a Golden Retriever."

The model maintains the "seed" of the room. It doesn't regenerate a random new room; it re-lights the existing room. This "Multi-turn Conversational Editing" is unique to the Gemini integration.
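It can help to log your edit sessions so you can replay a sequence that worked. Here is a minimal sketch of the living room sequence above as an ordered list of turns. `EditSession` is a hypothetical helper for your own notes, not part of any Gemini SDK, and it never talks to a model.

```python
from dataclasses import dataclass, field


@dataclass
class EditSession:
    """Hypothetical record of a multi-turn editing conversation."""
    turns: list[str] = field(default_factory=list)

    def say(self, instruction: str) -> "EditSession":
        self.turns.append(instruction.strip())
        return self  # returning self allows chaining

    def transcript(self) -> str:
        # The first turn generates; every later turn edits the same scene.
        lines = []
        for i, turn in enumerate(self.turns):
            label = "Generate" if i == 0 else f"Edit {i}"
            lines.append(f"{label}: {turn}")
        return "\n".join(lines)


session = (
    EditSession()
    .say("Create a modern living room with a beige sofa.")
    .say("Make it night time. Turn on the floor lamp. Change the sofa to navy blue velvet.")
    .say("Add a dog sleeping on the rug. Make it a Golden Retriever.")
)
print(session.transcript())
```

Keeping each instruction as its own turn mirrors how the conversation actually works: small, ordered changes to one scene rather than one giant prompt.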

5.3 The Restoration Trick

Got an old photo of your grandparents? Scan it. Upload it to Gemini.

Prompt: "Restore this old photograph. Fix the tears in the corner. Colorize it accurately for the 1950s—muted pastels. Sharpen the faces but keep the film grain to make it look authentic. Do not make the skin look plastic."

The "context-aware reconstruction" will rebuild the missing corner of the room based on the rest of the image. It’s magic.

6. The Quirks, Risks, and The "Jim Carrey" Problem

It’s not all sunshine and perfect kerning. There are shadows here.

6.1 The Safety Filter Struggles

Google is terrified of bad press. As a result, NB Pro is heavily lobotomized when it comes to public figures. You cannot ask for "Joe Biden eating a hot dog." You cannot ask for "Taylor Swift in a mech suit."

The Workaround (and the risk): Clever prompters have found that if you describe a person perfectly without naming them, the model will generate them.

Example: A user generated a scene from The Grinch with Jim Carrey’s exact likeness just by describing the makeup and the scene, omitting the name "Jim Carrey."

This "Privacy by Obfuscation" is a flimsy shield. It proves the model knows what these people look like; it’s just being told not to show you.

6.2 The Brand Block

Try to generate a Coke can. You might get a red can that says "Cola." Try to generate Mickey Mouse. You’ll get a "generic round-eared rodent." Google Ads users have noted that this makes it hard for brand campaigns unless you upload your own reference images as the source of truth.

6.3 The "Micro-Artifacts"

Zoom in. Closer. Closer.

At 300% zoom, the illusion breaks. You’ll see "fused finger joints." You’ll see that the texture of the denim jeans repeats in a weird, mathematical pattern. You’ll see that the reflection in the eye doesn't quite match the window.

The Verdict: It’s 95% perfect. But that last 5% is the difference between a photo you can put on a billboard and a photo you can put on Instagram. For high-end print, you still need Photoshop.

7. The Future: Agents and The Death of "Prompting"

Nano Banana Pro is just the beginning. The integration with Gemini Agents suggests a future where we won't "prompt" at all.

Imagine this workflow, hinted at by the Google Workspace integration:

User: "Gemini, look at my Q3 sales spreadsheet. Create a slide deck summarizing the growth. Generate a cover image showing a rocket taking off from a graph that matches our data trend. Use our corporate color palette (Blue #0055AA). Send it to the marketing team."

NB Pro isn't just an artist; it's an employee. It’s an infrastructure layer for the creative economy. It’s coming for the stock photographers, the layout designers, and the retouchers.

But for now? It’s the most fun you can have with a text box. It’s the tool that finally lets you get the image out of your head and onto the screen, exactly as you imagined it—spelling errors not included.

Tweetable Takeaway 🐦

"Nano Banana Pro isn't just an art generator; it's a reality engine. With Gemini 3's brain, it solves the 'AI text' problem, nails photorealism, and lets you edit with conversation. It's the 'Photoshop' of the AI era—boring enough to be useful, scary enough to be real. 🍌🤖 #NanoBananaPro #AIArt #Gemini3"

Appendix: 3 "Nano Banana" Prompts to Try Tonight

- The "Etsy Shop" Mockup Prompt: "A flat-lay photograph of handmade organic soaps on a rustic wooden table. Dried lavender sprigs scattered around. A brown kraft paper label on the soap reads 'SUNDAY MORNING' in typewriter font. Soft, natural window light from the left. 4K, highly detailed texture."

- The "Cyberpunk Street Food" Prompt: "Night time street food stall in Neo-Tokyo. Rain slicked pavement. The chef is a robot with a transparent head. Smoke rising from the grill. Neon sign above reads 'NANO NOODLES' in bright blue katakana and English. Cinematic lighting, teal and orange color grading."

- The "Historical Remix" Prompt: "A 1920s black and white news photograph of a dinosaur walking down 5th Avenue in New York. Crowds of people in period-accurate 1920s hats and suits looking shocked. Grainy film texture, motion blur on the dinosaur, authentic historical vibe."
